Ruan Zhengwei | Senior Engineer, Huawei

[Abstract] The rapid development of AI technology places higher demands on data storage systems. Massive training datasets, high-frequency model iteration, and low-latency inference are driving storage media to keep evolving along key dimensions such as capacity, performance, security, and energy efficiency. Taking storage media as its focus, this article systematically analyzes how their technology evolution path can meet the data storage challenges of the AI era.

As an important "fuel" for artificial intelligence, data directly determines the intelligence level of AI through its scale and quality, and it continuously drives AI models toward AGI (Artificial General Intelligence).

As the solid foundation of the entire AI workflow, data storage is responsible for storing, moving, and circulating data, and it supports both the training and the inference of large models. Emerging AI application scenarios place higher demands on storage capacity, data processing speed, data mobility, and security.

As a key component of data storage devices and an underlying engine of the new productivity revolution, storage media are undergoing major technological change. In this intelligent revolution, what challenges do storage media face, and in which directions will they develop?

I. In AI scenarios, storage media face four major challenges across the entire business process

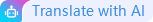

The end-to-end business process of a large AI model consists of four main phases: data collection, data preprocessing, model training, and inference deployment. Each phase has different operational tasks and different requirements for storage media.

The author has analyzed the entire business process in AI scenarios and summarizes the challenges as follows:

• Data collection and data preprocessing: single-disk capacity challenge

Collecting unstructured, multi-modal data pushes raw datasets to the scale of hundreds of PB, so the volume of data rises sharply. When the local disks of a data preprocessing server are small, preprocessing has to be completed in multiple batches, which lengthens the preprocessing cycle. Medium- and long-term data growth also demands highly scalable storage. Because single-disk capacity is small and the capacity of a single enclosure is limited, one computing cluster has to connect to multiple storage clusters, which makes the storage system large, complex, and difficult to operate and maintain.

• Model training: data read/write efficiency challenge

Most training datasets consist of small files numbering in the hundreds of millions. These files must be loaded quickly so that valuable GPU, NPU, and TPU computing resources are not left waiting, which places high demands on the random read/write capability (IOPS/TB) of storage media. In addition, to keep large model training consistent and avoid retraining, checkpoints (CKPT) must be saved and loaded frequently, which places high demands on the read/write bandwidth of storage media.
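The two requirements above can be made concrete with some back-of-the-envelope arithmetic. The following is a minimal sketch, not a sizing tool: the function names are hypothetical, and the checkpoint size, bandwidth, interval, and IOPS figures in the example are illustrative assumptions rather than numbers from this article.

```python
def checkpoint_seconds(ckpt_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to write or read one checkpoint at a sustained bandwidth."""
    return ckpt_bytes / bandwidth_bytes_per_s


def stall_fraction(ckpt_seconds: float, interval_seconds: float) -> float:
    """Share of wall-clock time spent on synchronous checkpoint saves."""
    return ckpt_seconds / (interval_seconds + ckpt_seconds)


def iops_per_tb(required_iops: float, capacity_tb: float) -> float:
    """Random-read density the media must sustain (the IOPS/TB metric)."""
    return required_iops / capacity_tb


if __name__ == "__main__":
    # Assumed values: 1 TB checkpoint, 10 GB/s aggregate write bandwidth,
    # one checkpoint per hour, 2 million small-file reads/s over 500 TB.
    t = checkpoint_seconds(1e12, 10e9)
    print(f"checkpoint time: {t:.0f} s")
    print(f"time stalled on checkpoints: {stall_fraction(t, 3600):.2%}")
    print(f"required density: {iops_per_tb(2_000_000, 500):.0f} IOPS/TB")
```

Halving the storage bandwidth doubles the checkpoint time in this model, which is why checkpoint-heavy training is sensitive to media write bandwidth.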

• Model inference: latency and bandwidth challenges

Taking offline inference with the ResNet50 model as an example, a single A100 GPU needs to process 68,994 images per second, and each P (PFLOPS) of computing power needs 14 GB/s of bandwidth, so the storage medium must deliver equally high bandwidth. Edge inference services are latency-sensitive (for example, Internet recommendation requires < 30 ms), so they need low storage-medium latency and fast vector retrieval to respond quickly to edge service requests. The KV Cache occupies a large amount of memory and has to be offloaded to disk. In the decode phase of AI inference, the KV Cache entries that are hit must be loaded back quickly in order to answer user queries, so the bandwidth required of the disk is extremely high.
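To see why an offloaded KV Cache stresses disk bandwidth, it helps to estimate its size. The sketch below uses the standard sizing rule for transformer attention caches (one key tensor and one value tensor per layer). The model dimensions and the 7 GB/s drive bandwidth are hypothetical values chosen for illustration, not figures for any specific model or product.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Size of the KV Cache: keys and values (factor 2) for every layer,
    KV head, and token, stored at bytes_per_elem (2 for FP16)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


def load_seconds(nbytes: int, bandwidth_bytes_per_s: float) -> float:
    """Time to read a cache of nbytes back from disk at a sustained bandwidth."""
    return nbytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Assumed model: 32 layers, 32 KV heads, head dimension 128, FP16,
    # and a single 4096-token conversation.
    size = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=4096)
    print(f"KV Cache size: {size / 2**30:.1f} GiB")
    print(f"reload time at 7 GB/s: {load_seconds(size, 7e9) * 1000:.0f} ms")
```

Because the size scales linearly with sequence length and batch size, long-context or multi-user serving multiplies the amount of data that has to be reloaded within the latency budget.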

• Full process: reliability and security challenges

Private datasets in key industries such as government and healthcare are exposed to the risk of sensitive information leakage and ransomware attacks. Data encryption, data security, and reliability should be considered thoroughly at the storage medium level.

• Full process: energy consumption challenge of large models

Large AI models are a new kind of "energy-consuming monster". According to public data, training GPT-3 consumed 1.287 GWh of electricity, roughly the annual electricity consumption of 120 American households. From the storage media perspective, more energy-efficient SSDs can be adopted. In addition, increasing the single-die density and bit density of SSDs reduces overall energy consumption and TCO.

Single-die density: current specifications are 512 Gb and 1 Tb, with 2 Tb and 4 Tb to follow. The higher the density of a single die, the larger the disk capacity.

Bit density: the number of bits that a single storage cell can hold, namely SLC (1 bit/cell), MLC (2 bits/cell), TLC (3 bits/cell), and QLC (4 bits/cell).
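The two definitions above translate directly into simple arithmetic. The sketch below is illustrative only: it assumes die density is quoted in terabits per die (the usual vendor convention), the die count is hypothetical, and the result is raw capacity before over-provisioning and error-correction overhead.

```python
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_states(cell_type: str) -> int:
    """Number of distinct charge levels a cell must hold: 2 ** bits per cell."""
    return 2 ** BITS_PER_CELL[cell_type]


def raw_capacity_tb(num_dies: int, die_density_tbit: float) -> float:
    """Raw capacity in terabytes: dies x terabits per die / 8 bits per byte."""
    return num_dies * die_density_tbit / 8


if __name__ == "__main__":
    for cell in BITS_PER_CELL:
        print(f"{cell}: {BITS_PER_CELL[cell]} bit(s)/cell, "
              f"{voltage_states(cell)} voltage states")
    # Assumed package: 512 dies of 1 Tb each.
    print(f"raw capacity: {raw_capacity_tb(512, 1.0):.0f} TB")
```

Each extra bit per cell doubles the number of voltage states that must be distinguished, which is why QLC gains capacity at the expense of write performance and endurance.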

To sum up, the storage media challenges in AI scenarios fall into four categories: capacity, performance, security, and energy efficiency. To meet the storage requirements of these business processes, the author believes that storage media must advance in a coordinated way along all four key dimensions.

II. Development trends of storage media in the AI era

The challenges brought by AI are driving storage media toward larger capacity, higher performance, lower power consumption, and stronger security.

1. Large capacity: storing EB-scale datasets, an ideal choice for AI services

From the early SLC and MLC to today's TLC and QLC, NAND flash technology has kept advancing, and the number of NAND layers has kept increasing. NAND flash is expected to exceed 300 layers in the future, which will raise storage capacity significantly. With the breakthroughs in 3D NAND technology, the capacity of QLC-based SSDs is growing rapidly. It is expected to reach 128 TB and 256 TB, and even 1 PB per disk will no longer be a dream.

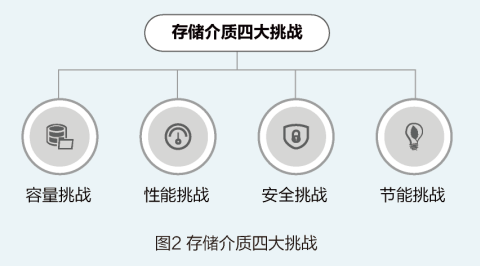

For example, DapuStor, Memblaze, and Micron have all released PCIe 5.0 30.72 TB TLC SSDs with a read bandwidth of 14 GB/s and a write bandwidth of 10 GB/s. In large-capacity QLC, Solidigm is the leader. Its QLC SSD built on 192-layer 3D NAND has reached a maximum capacity of 61.44 TB (D5-P5336), with sequential read performance of 7 GB/s and sequential write performance of 3 GB/s. A 122.88 TB QLC SSD entered mass production in the first half of 2025, also with sequential read performance of 7 GB/s and sequential write performance of 3 GB/s, although its FTL (Flash Translation Layer) mapping granularity is 32 KB. The domestic manufacturer DapuStor has likewise launched a 61.44 TB SSD (J5060) based on QLC media. QLC products currently focus on PCIe 4.0, and more powerful PCIe 5.0 QLC disks will be released in the future.

Compared with TLC SSDs, QLC SSDs offer comparable read performance while reducing energy consumption and space usage. This makes them well suited to read-intensive scenarios such as AI inference, CDN, and OLAP databases, and an ideal choice for AI services. As AI applications shift from training to inference, storage demand is moving toward local deployment. To meet more customized requirements, SSDs with higher performance and larger capacity will be introduced. SK hynix is reported to be developing a 300 TB ultra-large-capacity SSD to meet AI requirements and reduce overall data center TCO.

2. Excellent performance: high performance and low latency to accelerate AI services

Because of limits on front-end protocol and back-end channel rates, SSD performance cannot grow linearly with capacity. NAND die bandwidth has increased tenfold in 5 years, whereas channel bandwidth has increased only tenfold in 10 years. AI services place high demands on SSD performance for both small-file loading and large-file reading, with the goal of reducing GPU, NPU, and TPU waiting time and shortening the time to commercial deployment of large models.

The front-end interface protocol has evolved from PCIe 3.0 and PCIe 4.0 to PCIe 5.0. Compared with PCIe 4.0, PCIe 5.0-based SSDs deliver double the performance.
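The doubling follows from the per-lane signaling rate, which goes from 8 GT/s (PCIe 3.0) to 16 GT/s (PCIe 4.0) to 32 GT/s (PCIe 5.0), all with 128b/130b line encoding. The sketch below computes the resulting link ceiling. It assumes the x4 link width typical of NVMe SSDs and ignores packet-level protocol overhead, so real drives land somewhat below these figures.

```python
# Per-lane signaling rate in GT/s for each PCIe generation.
PCIE_GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32}

# PCIe 3.0 and later carry 128 payload bits in every 130 transmitted bits.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen: str, lanes: int = 4) -> float:
    """Theoretical one-direction link bandwidth in GB/s (decimal)."""
    return PCIE_GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8


if __name__ == "__main__":
    for gen in PCIE_GT_PER_S:
        print(f"PCIe {gen} x4: {pcie_bandwidth_gb_s(gen):.2f} GB/s")
```

For an x4 link this gives roughly 3.94, 7.88, and 15.75 GB/s, which matches the order of magnitude of the sequential read figures that vendors publish for each generation.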

Many mainstream SSD manufacturers, such as SK hynix, Micron, Huawei, and DapuStor, are already mass-producing PCIe 5.0 SSDs. For example, the PS1010 from SK hynix offers sequential read performance of 15,000 MB/s and sequential write performance of 10,200 MB/s.

In addition, the development of CXL opens up faster and more flexible data transfer solutions. CXL has now evolved to version 3.0, with a transfer rate of 64 GT/s. CXL connects devices to CPUs and decouples storage from computing. It also lets the CPU access larger memory pools on attached devices with low latency and high bandwidth, breaking through the limits of traditional DDR channels and thereby expanding memory capacity and bandwidth. For cache scenarios that demand extreme bandwidth, such as the KV Cache in large model inference, CXL devices can be used to speed up data loading. Samsung has introduced CMM-D, a memory module based on the CXL protocol that integrates seamlessly with existing DIMMs and increases bandwidth by up to 100%.

3. Green and low carbon: efficient, energy-saving storage for a green data center

Worldwide, energy conservation and emission reduction have become a shared mission, and all industries are actively pursuing the goals of "carbon peak" and "carbon neutrality". AI workloads are power-hungry monsters that consume large amounts of electricity, from building the data center to running the services. Storage accounts for up to 35% of a data center's energy consumption, and competition among data centers has shifted from computing power to energy. SSDs offer high density, high reliability, low latency, and low energy consumption, and in the AI era the replacement of HDDs by SSDs has become an inevitable trend. Deploying all-flash SSD storage at scale can greatly reduce the energy consumption of AI computing centers and achieve green, energy-saving, sustainable development.

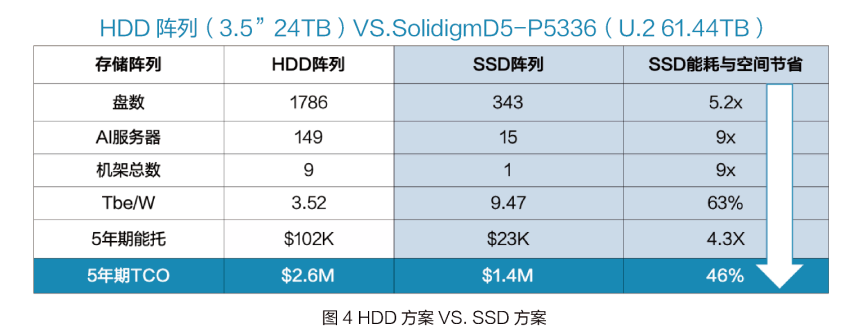

Many CSPs in North America, such as xAI, have built large data centers and deployed Solidigm large-capacity QLC drives to build AI data lakes and reduce data center TCO. The following example takes a 10 PB storage solution built by a domestic cloud provider and compares the energy consumption of HDDs and SSDs, as shown in Figure 4:

From the above comparison, we can see:

(1) To meet the high IOPS requirements of AI scenarios, the server is configured with 149 HDDs, far more disks than the SSD configuration requires, and this also increases the TCO (total cost of ownership);

(2) Compared with HDDs, SSDs have better power density, which brings large cost savings and a 46% TCO reduction over 5 years.
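The reason an HDD configuration needs so many more disks can be sketched as a sizing rule: the drive count is set by whichever is larger, the number needed for capacity or the number needed for IOPS. The code below is a minimal illustration under assumed inputs. The per-drive capacity and IOPS values and the 1 million IOPS target are hypothetical and are not taken from the comparison in this article.

```python
import math


def drives_needed(capacity_tb: float, target_iops: float,
                  drive_capacity_tb: float, drive_iops: float) -> int:
    """Drives required to satisfy both the capacity and the IOPS target."""
    by_capacity = math.ceil(capacity_tb / drive_capacity_tb)
    by_iops = math.ceil(target_iops / drive_iops)
    return max(by_capacity, by_iops)


if __name__ == "__main__":
    # Assumed workload: 10 PB (10,000 TB) and 1,000,000 random-read IOPS.
    # Assumed drives: 20 TB HDD at 200 IOPS, 61.44 TB SSD at 500,000 IOPS.
    hdd = drives_needed(10_000, 1_000_000, drive_capacity_tb=20, drive_iops=200)
    ssd = drives_needed(10_000, 1_000_000, drive_capacity_tb=61.44, drive_iops=500_000)
    print(f"HDDs needed: {hdd} (IOPS-bound)")
    print(f"SSDs needed: {ssd} (capacity-bound)")
```

Under these assumptions the HDD count is dictated by IOPS rather than capacity, so most of the purchased HDD capacity, power, and rack space serves only to reach the performance target.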

4. Security and reliability: built-in security in the storage medium to protect core data assets

In the era of large AI models, the reliability of data determines the accuracy of the models. Industry data is mostly private data, which makes it both an important data asset and the asset most vulnerable to attack. New types of security attacks such as ransomware, data poisoning, and theft constantly threaten the reliability of large model training data and the accuracy of results, and they can cause serious economic losses. For example, in March 2023 Meta's large model was leaked, and similar large models such as Alpaca, ChatLLama, ColossalChat, and FreedomGPT appeared one after another in the following week. Meta was forced to announce that it would open-source the model, and its earlier investment was largely wasted, a heavy loss. From the storage media perspective, some manufacturers have been exploring security measures at the media layer, for example extracting behavior patterns by analyzing IO operations on SSDs, aggregating the features across multiple disks, and using an ML model in a detection engine to identify abnormal behavior and defend against ransomware.
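As a rough illustration of what an IO-level behavior feature can look like, the sketch below computes the Shannon entropy of written blocks. This is a simple stand-in heuristic, not the ML model used by any vendor: encrypted data looks nearly random, so a sudden rise in the share of high-entropy writes is one signal a detection engine could feed into its model. The function names and the 7.5 bits/byte threshold are assumptions made for this example.

```python
import math
from collections import Counter


def shannon_entropy(block: bytes) -> float:
    """Entropy of a block in bits per byte (0.0 = constant, 8.0 = uniform)."""
    if not block:
        return 0.0
    n = len(block)
    return -sum((c / n) * math.log2(c / n) for c in Counter(block).values())


def high_entropy_ratio(blocks: list[bytes], threshold: float = 7.5) -> float:
    """Fraction of written blocks whose entropy exceeds the threshold."""
    if not blocks:
        return 0.0
    return sum(1 for b in blocks if shannon_entropy(b) > threshold) / len(blocks)


if __name__ == "__main__":
    zero_block = b"\x00" * 4096            # e.g. sparse or freshly zeroed data
    uniform_block = bytes(range(256)) * 16  # every byte value equally frequent
    print(shannon_entropy(zero_block), shannon_entropy(uniform_block))
    print(high_entropy_ratio([zero_block, uniform_block]))
```

Compressed media files also have high entropy, so a real detector would combine this feature with others, such as overwrite patterns and cross-disk aggregation, rather than rely on it alone.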

III. Conclusion

The rapid development of AI technology is accelerating the evolution of storage media in four major directions: larger capacity, higher performance, stronger security, and better energy efficiency. Facing the data storage challenges of the AI era, storage media technology has entered a critical period for breakthroughs. Only through continuous innovation and by breaking through existing technological bottlenecks can storage media provide a solid foundation for the AI era and secure a first-mover advantage in intelligent development.

* This article appears in the second issue of the user special edition of "words and numbers".